The Parachut<e> addresses broader issues of digital pedagogy in the context of an advice column for piloters of the <emma> LMS at Georgia Tech. For those of you who are new to The Parachut<e> and <emma>, you can find a more detailed discussion of both here.

This week’s post grew out of several different sources. First, comments I received from students suggested they might not be familiar with one of the key features of <emma>: markup in graded or peer-reviewed documents. Thinking about how to teach students to use <emma>’s feedback tools got me thinking more generally about feedback and how students use it. That led me to two earlier TECHStyle posts by Kathryn Crowther and Leeann Hunter. Katy’s post considers how we can use tools and time efficiently to provide meaningful asynchronous and face-to-face feedback on student writing. Leeann discusses the difficulty of getting students to think about their contributions to group projects qualitatively as well as quantitatively. She also explores how lessons learned from “social commerce” might apply when we design collaborative projects.

Using various rather nifty bits of data collection, <emma> can make the act of marking a student composition useful for assessment well beyond the moment of grading that one assignment. While individual instructors mark individual essays, <emma> generates and collects data that can be mined, interpreted, and evaluated. That data can help assess three things: an individual student’s progress over the semester, the problems a whole class might be having with an issue such as quote integration, and how well a program is meeting its curricular goals. Drawing on the insights offered by Katy and Leeann, I started to wonder whether I could design a classroom activity that would do several things at once. It would give students more opportunities for face-to-face instructor feedback and get them thinking about their work qualitatively. It would also teach them to use <emma>’s feedback tools and turn individual assessment into a learning tool for the whole class.

I ended up with an idea for a post-assessment peer review exercise. I haven’t tried this yet, so I am really interested in hearing what you think, including whether it’s even a good idea in the first place. Here are my thoughts on how it might work.

Step One: Mark Student Compositions

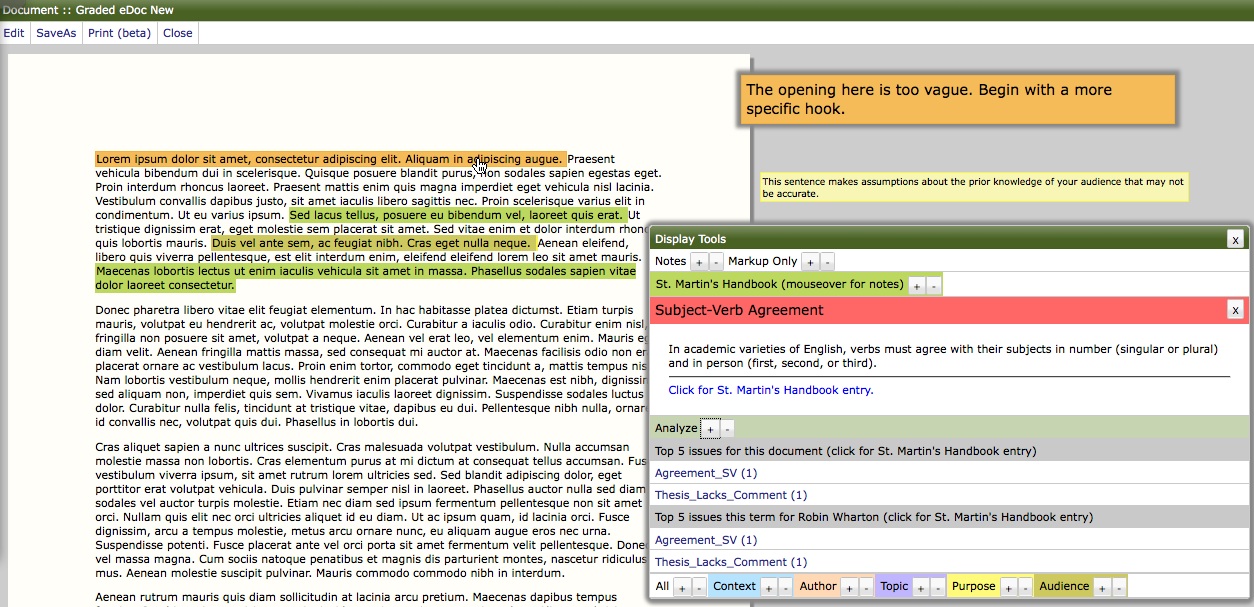

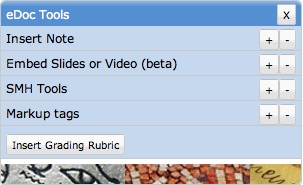

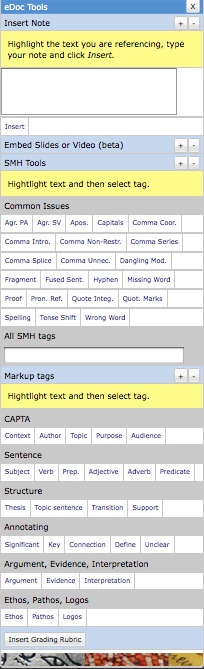

<emma> offers a number of markup tools that instructors can use to mark student writing. So the idea is to use these, however you see fit, to provide feedback on student writing. For eDocs, the markup tools are housed in the eDoc toolbar:

Step Two: Conduct a Brief In-Class Workshop

In the first class period after you release the marked essays, ask for a volunteer to have her marked essay “workshopped” by the class. You can use this time to show students how the tools work and to invite questions about the feedback they received on their own papers. You might also debrief the class on the most common strengths and weaknesses you saw in this set of papers, and perhaps on areas where students improved. The exercise doesn’t have to take a whole class period. However, it should familiarize students with both the tools and the concepts they’ll be working with in the peer review exercise.

Step Three: Assign an Asynchronous Peer Review Exercise

Ask students to work in groups of three or four on an out-of-class, asynchronous peer review of one another’s marked essays. The exercise could be tightly structured around a single aspect of the rubric. For example, you might ask students to give their peers specific suggestions for improving organization. Or it could be loosely structured: each student poses questions about the feedback they received, and their peers try to answer them.

Step Four: Set Aside Some Class Time for Discussion or Schedule Group Conferences

Using the eDoc tools, both peers and instructors can add comments and use the markup tools to identify errors and highlight formal or structural features. In the display, mousing over a marked element can call up an additional explanation, which in turn links to an eText or an external web resource.

Once students have completed the out-of-class peer review, you could set aside some class time for them to meet in their small groups and talk with you and their peers. Alternatively, you could invite groups to schedule conferences with you if they have more questions. The point of the small groups is to start a conversation, guided by the feedback students have received and given, in which you are ideally not the dominant participant.

Your Thoughts?

Obviously, this whole exercise presumes that students have some incentive to revise, whether for a portfolio or for extra credit. Building on Leeann’s ideas about student collaboration, I wonder whether it might help to reward peer review partners, too. They could earn extra credit if their reviews lead to a higher portfolio grade, or earn extra credit based on the revised essay. One caution: if you use a rubric, students will have a pretty good idea of the grades their peer review partners received, even if you don’t put letter grades on your feedback. The exercise also takes up class time, so adding a post-assessment peer review to a project might mean dropping a pre-assessment peer review somewhere along the way.

What do you think? Do you know of any studies that look at the effectiveness of similar kinds of activities? Have you tried anything like this in your own classroom? Please, let us know.

I think this is a terrific idea. So often I spend hours typing comments that I hope will be helpful. Then the student glances at them, decides whether s/he is happy with the grade, and tosses the paper aside. It seems like the *best* learning would happen when students are asked to reflect on the feedback and try to implement it.

I love the idea of getting peers to review the marked papers and give concrete suggestions. Would you then have the students revise and resubmit the assignment to be regraded?

Thank you for the feedback, Katy. To make this sort of thing work, it seems you would have to offer some incentive for revision. For example, you might require students to revise at least one portfolio artifact. Last semester, I offered extra credit to students who revised portfolio artifacts and discussed the post-assessment revision in their reflections. I didn’t regrade the papers. Instead, I added 3 points of extra credit to the final grade for the original assignment when a portfolio reflection showed “substantial” revision. To decide whether a student earned the 3 points, I checked the revised artifact only to confirm that the student had made the changes the reflection described. That is basically how I grade portfolios anyway. A number of students revised, picking up extra credit and substantially improving the overall quality of their reflections in the process.